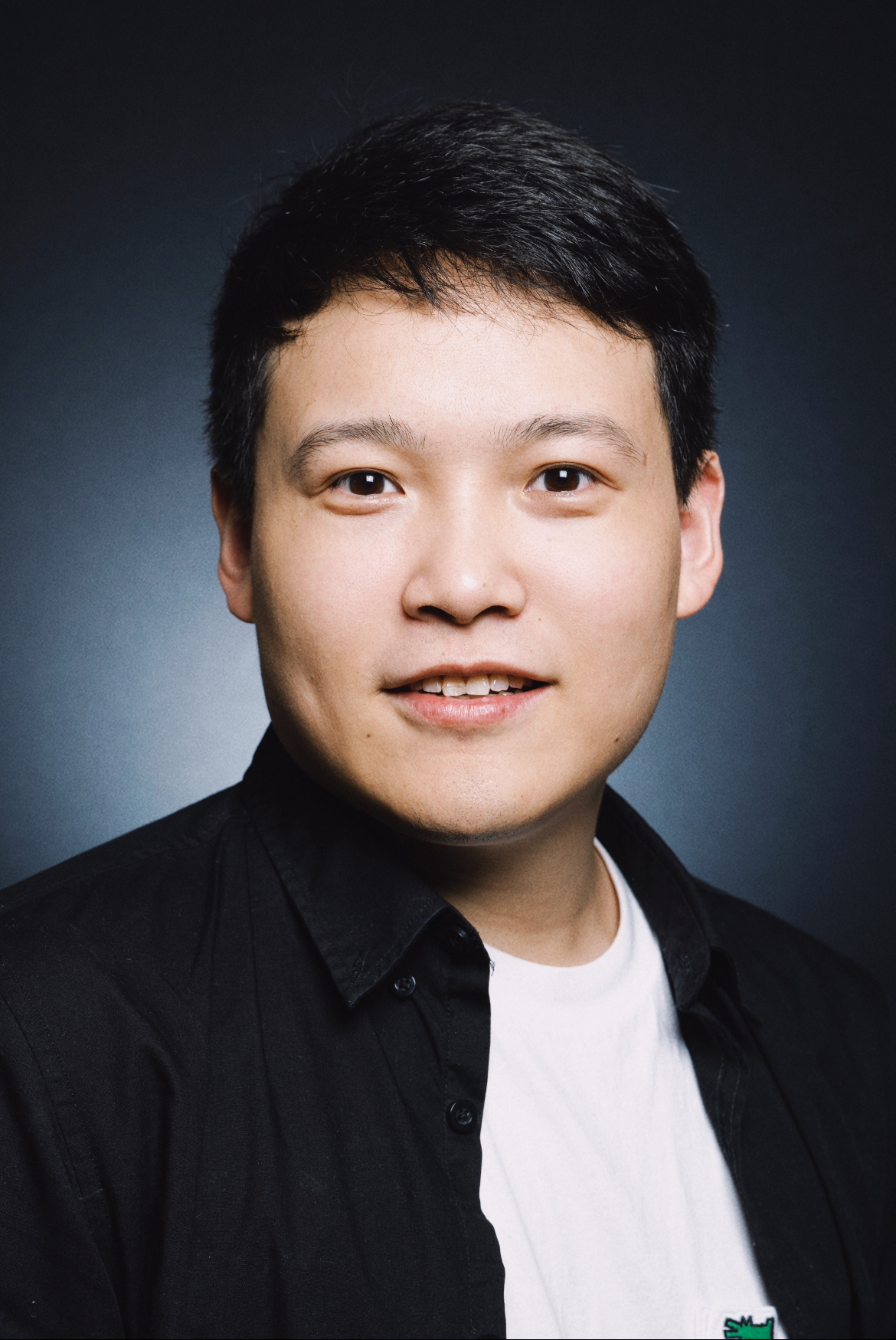

Jindong Gu

Senior Research Scientist, Google

Senior Research Fellow, University of Oxford

I am a Senior Research Scientist at Google, specializing in advancing the reliability and safety of AI technologies. I am also affiliated with the University of Oxford as a Senior Research Fellow. Previously, I focused on ensuring the safety of foundation models within the Gemini Safety team of DeepMind. Currently, my work centers on enhancing the reliability and robustness of AI agents in the CAIR team.

I received my Ph.D. in 2022 from the Tresp Lab at the University of Munich, Germany, advised by

My research goal is to build Responsible AGI. Specifically, I am interested in the interpretability, robustness, privacy, and safety of Visual Perception, Foundation Model-based Understanding and Reasoning, Robotic Policy and Planning, and their Fusion towards General Intelligence Systems (Embodied Agent).

[Hiring!] If you would like to work with me as a Research Intern, please drop me an email, or contact me on LinkedIn or X. I speak English, Chinese, and German.

News

- 11 / 2025: Outstanding Paper Award in LASS Workshop @ CIKM 2025.

- 11 / 2025: Invited to serve as Area Chair in ICML 2026

- 09 / 2025: 4 papers are accepted in NeurIPS 2025, 2 main + 2 D&B Track

- 09 / 2025: Best Paper Award in DDLI Workshop @ IJCAI 2025.

- 09 / 2025: Invited to serve as Area Chair in ICLR 2026

- 08 / 2025: 4 papers are accepted in EMNLP main 2025 + 1 Findings.

- 07 / 2025: Invited to serve as Area Chair in AAAI 2026 Alignment Track

- 06 / 2025: Best Paper Award in Responsible GenAI Workshop @ CVPR 2025.

- 06 / 2025: Invited Talk on Multimodal LLM in UNCV Workshop @ CVPR 2025.

- 06 / 2025: Gemini 2.5 Technical Report Released! Glad to be one of the contributors.

- 05 / 2025: 4 papers are accepted in ACL main 2025.

- 04 / 2025: Invited to serve as Area Chair in NeurIPS 2025.

- 02 / 2025: 4 papers on Safety and Multimodal Understanding are accepted in CVPR 2025.

- 12 / 2024: Two workshop proposals (Test-time Scaling for CV and Eval-FoMo) accepted in CVPR 2025.

- 11 / 2024: Invited Talk at PFATCV Workshop and MediaTrust Workshop in BMVC 2024.

- 10 / 2024: I co-organize Eval-FoMo Workshop and give a talk in GigaVision Workshop in ECCV 2024.

- 07 / 2024: Six papers on AI Safety & Privacy are accepted to ECCV 2024.

- 07 / 2024: Papers on AI Safety & Interpretability are accepted to ICML, COLM, and NeurIPS 2024.

- 06 / 2024: I will serve as Area Chair in NeurIPS 2024 Datasets and Benchmarks Track.

- 04 / 2024: Our survey paper on Adversarial Transferability is accepted to TMLR.

- 02 / 2024: Three papers on AI safety are accepted to CVPR 2024.

- 01 / 2024: Three papers on AI safety (two Spotlight) are accepted to ICLR 2024.

- 01 / 2024: Two journal papers on AI safety are accepted to TIFS.

Previous News

- 12 / 2023: Three papers on AI safety and robustness are accepted to AAAI 2024.

- 11 / 2023: I started a part-time Faculty Scientist position at Google DeepMind.

- 10 / 2023: I was promoted to Senior Research Fellow at University of Oxford.

- 09 / 2023: One paper is accepted to BMVC 2023 and one benchmark paper to NeurIPS 2023.

- 07 / 2023: Three papers on VLM Understanding and Robustness are accepted to ICCV 2023.

- 05 / 2023: Selected as CVPR 2023 Outstanding Reviewer.

- 05 / 2023: One paper has been accepted to ACL Findings 2023.

- 02 / 2023: One paper has been accepted to CVPR 2023.

- 11 / 2022: I joined the Torr Vision Group as a Postdoctoral Researcher at the University of Oxford.

- 08 / 2022: I joined the Google Responsible ML Team as a Research Intern.

- 07 / 2022: Four papers on Robustness of Vision Systems are accepted to ECCV 2022.

- 04 / 2021: I joined Microsoft Research Asia as a Research Intern.

- 03 / 2021: One paper has been accepted as oral to CVPR 2021.

- 01 / 2021: One paper has been accepted to ICLR 2021.

Experience

- 11 / 2023 - present: Senior Research Scientist at Google Research and DeepMind, London, UK

- 10 / 2023 - present: Senior Researcher at University of Oxford, Oxford, UK

- 11 / 2022 - 09 / 2023: Postdoctoral Researcher at University of Oxford, Oxford, UK

- 08 / 2022 - 11 / 2022: Research Intern at Google, New York, USA

- 04 / 2021 - 02 / 2022: Research Intern at Microsoft Research Asia, Beijing, China

- 08 / 2020 - 03 / 2021: Research Intern at Tencent AI Lab, Shenzhen, China

- 09 / 2017 - 08 / 2020: Doctoral Researcher at Siemens Technology, Munich, Germany

Selected Publications

- Responsible Generative AI: What to Generate and What Not [PDF] Under Review, 2024

- Self-Discovering Interpretable Diffusion Latent Directions for Responsible Text-to-Image Generation [PDF] [CODE] IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2024

- An Image Is Worth 1000 Lies: Transferability of Adversarial Images across Prompts on Vision-Language Models [PDF] [CODE] International Conference on Learning Representations (ICLR), 2024

- European Conference on Computer Vision (ECCV), 2022

- SegPGD: An Effective and Efficient Adversarial Attack for Evaluating and Boosting Segmentation Robustness [PDF] European Conference on Computer Vision (ECCV), 2022